Porting Graph::Easy to TypeScript with GPT-5.2 and Azad

+-------------+     +----------------+     +--------------+
| Graph::Easy | --> | GPT-5.2 + Azad | --> | GraphEasy.ts |
+-------------+     +----------------+     +--------------+

On December 24th, I saw this blog post on Hacker News. The author details their multiple LLM-assisted attempts to port the Graph::Easy library from Perl to TypeScript. Graph::Easy is a 23,000-line library for rendering graphs to a variety of formats. Its feature set is fairly unusual, and for the most part it hasn’t been updated in 15 years.

ChatGPT says this port would cost somewhere between $100k and $200k and take 1,000 developer hours for a “near full parity port”. Maybe a little high, but the right ballpark. So what can Azad + GPT-5.2 do? (Not much of a spoiler, given the publish date of this post: it can do quite a bit better.)

The graphs rendered by Graph::Easy are beautiful, so it seemed like a fun challenge.

The Challenge

Can LLMs port this large library autonomously to a new language? I use these tools a lot, and they’ve grown immensely in their long-context instruction following. For a task of this scope, it is obvious that a single context window (even 1 million tokens) is not sufficient (spoiler alert: this task took 52 tasks’ worth of context window and ~250 million tokens). The model also needs to be able to work autonomously and preserve its knowledge and state as it moves through large contexts. A “greenfield” implementation of a graph library like this would be a lot easier for LLMs. I think even something like GPT-4 would produce a reasonable library over a few iterations. That’s not what I’m trying to do here, though. A feature-for-feature port of a large library is a different class of task.

GPT-5.2 was released recently and it ticks these boxes well. In my experience, GPT-5.2 (and especially the -heavy and -max reasoning levels) has the best autonomy. It is also able to maintain coherence at high context length (>200k).

I’ve been using Azad a lot with GPT-5.2, and I even hacked on some features recently, so I chose it to pair with GPT-5.2. Azad is an agentic coding tool built with a strong focus on the latest models and autonomous coding.

I chose to stick with a short, clear prompt (and not just because it was Christmas Eve!):

Your task is to port the graph-easy-0.76 library to TypeScript.

Look at the structure of the Perl library and consider how to port that structure to your TypeScript version.

Consider carefully the requirements

@Graph-Easy-0.76/examples/as_ascii has a set of examples to run. You will also port these examples @Graph-Easy-0.76/examples_output has the canonical output for each example (as well as its stderr, but you only have to look at that when it is useful).

You will use these example outputs as your source of truth and your completion criteria.

Your TypeScript library + your TypeScript examples/as_ascii must match the output of the Perl version *exactly*. I would recommend writing a helper script that will run your version and compare it to the canonical output.

Now that you understand the task, the success criteria, and how to test, it's your turn. Take over! Let me know when you're done

There’s no prompt hacking/engineering. I didn’t do a long exploration or planning stage. In my experience, small clear instructions and a way to evaluate progress are all you need with the right model/harness.
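To make “a way to evaluate progress” concrete, here is a minimal sketch of the kind of comparison helper the prompt recommends. It is not the script Azad actually wrote: the `ExampleCase` shape, the `compareOutputs` name, and the sample file names are assumptions for illustration, and a real helper would fill `actual` by running the port and `expected` by reading the canonical files.

```typescript
// Hypothetical shape of one example; field names are illustrative only.
interface ExampleCase {
  name: string;     // e.g. the input file name
  expected: string; // canonical output from the Perl version
  actual: string;   // output from the TypeScript port
}

interface Summary {
  passed: string[];
  failed: string[];
}

// Exact string comparison: the prompt asks for output that matches
// *exactly*, so no whitespace normalization is applied.
function compareOutputs(cases: ExampleCase[]): Summary {
  const summary: Summary = { passed: [], failed: [] };
  for (const c of cases) {
    (c.actual === c.expected ? summary.passed : summary.failed).push(c.name);
  }
  return summary;
}

// Two made-up cases: the second differs only by a missing trailing newline.
const demoSummary = compareOutputs([
  { name: "a.txt", expected: "+---+\n| A |\n+---+\n", actual: "+---+\n| A |\n+---+\n" },
  { name: "b.txt", expected: "+---+\n| B |\n+---+\n", actual: "+---+\n| B |\n+---+" },
]);

console.log(
  `${demoSummary.passed.length} passed, ${demoSummary.failed.length} failed: ` +
    demoSummary.failed.join(", ")
);
```

The single pass/fail count is the point: it gives the model an unambiguous number to drive down to zero.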

If you just want to check out the results, here’s the webpage, which renders with the TypeScript port and the original library (via WebPerl), and compares them:

Here’s the source code:

https://github.com/fairfieldt/graph-easy-ts/

and a rendered version of the Azad task that did all the work. Be warned: this link will load a 50 MB task file (there are some smaller tasks inlined further down the page; feel free to disable JavaScript, though you’ll miss a lot of context):

Background

Graph::Easy is a Perl library for rendering graphs. I haven’t written more than a couple hundred lines of Perl in my life, and most of those look like perl -pe 's/foo/bar/g' file.txt.

The original blog post was trying to get the ASCII generator working. The author's first attempt, using Claude, successfully ported it to the web using WebPerl. Then the author tried to do a native TypeScript port, first using Claude Code and then a variety of other tools. The results were not quite what they had hoped. Check out the blog for details; it's definitely worth a read.

I decided a reasonable goal was getting the ASCII output examples 1:1 with the original Perl, which is the basic goal of the original author. If I were doing this port by hand, I’d stop once I had reasonable-looking graphs coming out, even if they weren’t exact matches. GPT-5.2 will have no such problem, and as long as it has a good way to evaluate the results, it’ll keep going till it’s 100%.

So, Let’s Go!

In the (holiday) spirit of the challenge, I started off with a task to download the original library and run the examples:


Astute readers will notice that I was wrong: examples/as_ascii is an example script, not the inputs. To the consternation of Charles Babbage, Azad took the wrong figures and gave the correct answer anyway.

With step 1 done, I started a new task:

Sounds about right.

Time to Wait

After that I just let things run. I neglected to take the lesson from Simon Willison about having the agent periodically commit and push so I could watch progress, so a couple of times I asked Azad how things were going. That was the extent of my intervention.

The first couple of hours were a lot of thinking, a lot of reading Perl code, and a lot of failing tests.

The next morning we were down to 9 tests failing. All of the remaining failures were .dot or .gdl input, which are different formats from the .txt (Graph::Easy syntax) input of the rest. All of the .txt tests were passing.

At this point, I left that task running and started a third in an isolated worktree.

In about 15 minutes, plus a little back and forth as I asked for more features, Azad came back with Graph Easy TS.

Finished!

Azad kept working, and the number of failing tests kept falling.

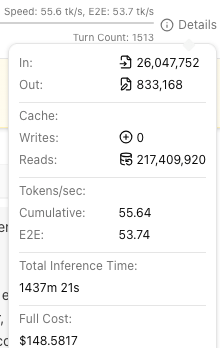

After just under 24 hours, ~250 million tokens, and $148.58, all the tests passed! The rendered graphs match identically.

Still missing: the other output formats. At this point it was 2 pm on Christmas Day, so I sent a minimal prompt to implement them.

That took another chunk of time, but eventually all of those tests went green too.

The End

So what are the lessons learned here? If you give modern LLMs a solid harness and a way to verify their progress, they can do a lot. I didn’t have to look at a single line of code, send a debugging prompt, or do any manual wrangling. The result is useful, and forms a solid base to improve on. I’m sure there are still edge cases out there to collect and fix. For $150, Azad + GPT-5.2 produced a solid port. If I had been willing to wait 48 hours instead of 24, I could have used the Flex tier and cut the cost in half. If I had paid an engineer to do this port, it would have been 1-2 orders of magnitude more expensive.
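For a rough sense of scale, the arithmetic behind those numbers can be sketched as below. The inputs are the figures from this post ($148.58, roughly 250 million tokens, and the Flex tier halving the cost); the variable names are made up for illustration, and the per-token figure is only a blended average over every kind of token, not a published price.

```typescript
// Figures taken from this post; the token count is approximate.
const totalCostUsd = 148.58;
const totalTokensMillions = 250;

// Blended average across all tokens in the run (input, cached, and output).
const usdPerMillionTokens = totalCostUsd / totalTokensMillions;

// Assumes the Flex tier halves the bill, as stated above.
const flexCostUsd = totalCostUsd / 2;

console.log(`~$${usdPerMillionTokens.toFixed(2)} per million tokens`);
console.log(`~$${flexCostUsd.toFixed(2)} on the Flex tier`);
```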

GPT-5.2 showed spatial reasoning again and again as it worked through the test cases. It built a test harness based on diff and was pretty good at reasoning from diff output back to graph layout issues.
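As an illustration of why diff output is useful for layout bugs, here is a small sketch that reports the first row and column where two ASCII renderings diverge. This is not the harness the model built (that one was based on diff); `firstDifference` and the `Difference` type are hypothetical names, and the sample boxes are made up.

```typescript
interface Difference {
  line: number;     // 1-based row of the first mismatch
  column: number;   // 1-based column of the first mismatch on that row
  expected: string; // the expected row ("" if the row is missing)
  actual: string;   // the actual row ("" if the row is missing)
}

// Returns null when the two renderings are identical.
function firstDifference(expected: string, actual: string): Difference | null {
  const expectedRows = expected.split("\n");
  const actualRows = actual.split("\n");
  const rowCount = Math.max(expectedRows.length, actualRows.length);

  for (let i = 0; i < rowCount; i++) {
    // A row past the end of one rendering is undefined, so it never
    // compares equal to a real row on the other side.
    if (expectedRows[i] === actualRows[i]) continue;

    const left = expectedRows[i] ?? "";
    const right = actualRows[i] ?? "";
    let col = 0;
    while (col < left.length && col < right.length && left[col] === right[col]) {
      col++;
    }
    return { line: i + 1, column: col + 1, expected: left, actual: right };
  }
  return null;
}

// The label in the second rendering is shifted one cell to the right.
const layoutDiff = firstDifference(
  "+---+\n| A |\n+---+",
  "+---+\n|  A |\n+---+"
);
console.log(layoutDiff);
```

A row and column map directly onto a cell in the rendered grid, which is what makes a textual diff a workable signal for a spatial problem.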

The Perl -> TypeScript translation is not a 1:1 mapping, and is sometimes quite far from one. Azad + GPT-5.2 were able to reason about the actual behavior of the Perl code and convert it to fairly idiomatic TypeScript. There are lots of comments like the one below. They are quite helpful the first time you read the source.

GPT-5.2 especially, and also the other models of this class (Gemini 3.0, Opus 4.5), make something clear: coding ability, long-context comprehension, and task handoff are solved problems. The real frontier is how humans can better specify their tasks and requirements, both more quickly and more completely. This is partly a skill (and familiarity) issue, but even more a tools issue. 2026 may be the year of true AI-assisted spec creation. I’d love to talk to people who are thinking about and working on this! Send me a message: t@fairfieldt.com.